Project Intro

Group major project (3 members), MSc & MA Innovation Design Engineering, Royal College of Art & Imperial College London

Keywords: Inclusive Design; Wearable Devices; Natural User Interface; Spatial Computing.

Method & Tools: Human-Centered Design; EXP; Unity; C#; Sensor Fusion; HoloLens Development.

Duration: 4 months.

Problem Statement

#Near Future

Mixed Reality

Internet of Things

Over the last few years, the development of Mixed Reality (MR) and the Internet of Things (IoT) has pointed towards a screen-free future, signalling that we are entering an era of ubiquitous computing. The user interface is shifting from touch screens to the surrounding environment. Interaction between humans and machines will change significantly, and more of it will take place in space.

#Trend of Interaction

Ref: Microsoft

Ref: Google

As we enter the era of spatial computing, in which we will be surrounded by interfaces, embodied interaction becomes a natural direction, especially hand-gesture control.

However, this brings a challenge for accessibility: as interfaces expand into more dimensions, they become less accessible, because they demand more mobility from their users.

And if hand-gesture control in particular becomes a mainstream interaction method, can a world driven by hand gestures be inclusive enough?

What about people with disabilities?

Imagine how they could be included in spatial interaction.

Method and Approach

#Experiments

Disabilities are often highly individual, which makes generality hard to achieve in inclusive design. To improve adoption, many inclusive design projects target small groups of people, so a large number of different systems have to be designed for users with diverse conditions. Conversely, a system with a fixed form or interaction model that is meant to accommodate people with different characteristics may struggle to maintain the same level of efficiency and stability for every user.

Instead of making different users adapt to one system, can we make a flexible, customisable interface that adapts to different users?

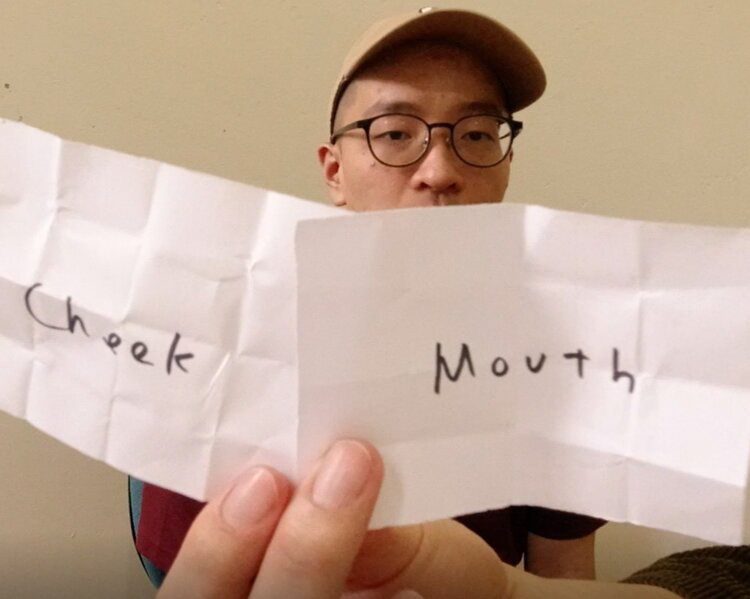

To explore the possibility of an inclusive natural user interface for people with physical disabilities, we conducted several experiments.

The goal of these experiments was to extract a full-body, movement-based interaction pattern for spatial interaction.

We gave participants four 3D object manipulation tasks and imposed different restrictions on which parts of their bodies they could use.

#Two-Point System

From the behaviour observed in the experiments, we found that all the interactions could be described as the relative motion of two points in 3D space:

Selection - The two points quickly approach each other.

Positioning - One point stays still while the other moves.

Scaling - Both points move apart from, or towards, each other at the same time.

Rotation - The two points rotate around their centre point.

We were excited to find that, even though participants were assigned different body parts, there was a clear pattern of using the relative motion of two body parts to express intentions, which follows the rules of the two-point model.
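To make the two-point model concrete, here is a minimal sketch of how the four patterns could be told apart from two snapshots of the points' positions. It is an illustration under stated assumptions, not the Dots implementation: the function `classify_two_point_motion`, all threshold values, and the availability of 3D positions for both points are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(p: Vec3, q: Vec3) -> Vec3:
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def _angle_between(u: Vec3, v: Vec3) -> float:
    """Angle in radians between two non-zero vectors."""
    dot = sum(ui * vi for ui, vi in zip(u, v))
    cos = dot / (math.hypot(*u) * math.hypot(*v))
    return math.acos(max(-1.0, min(1.0, cos)))


def classify_two_point_motion(
    a0: Vec3, b0: Vec3, a1: Vec3, b1: Vec3, dt: float,
    still_eps: float = 0.01,      # metres: below this a point counts as still (hypothetical)
    contact_dist: float = 0.03,   # metres: points this close count as touching (hypothetical)
    select_speed: float = 0.5,    # m/s: minimum closing speed for a selection (hypothetical)
    scale_eps: float = 0.02,      # metres: separation change that counts as scaling (hypothetical)
    turn_eps: float = math.radians(10),  # direction change that counts as rotation (hypothetical)
) -> str:
    """Classify how points A and B moved between two frames taken dt seconds apart."""
    d0, d1 = math.dist(a0, b0), math.dist(a1, b1)
    # Selection: the gap closes quickly and the points end up (nearly) touching.
    if d1 <= contact_dist and (d0 - d1) / dt >= select_speed:
        return "selection"
    a_still = math.dist(a0, a1) < still_eps
    b_still = math.dist(b0, b1) < still_eps
    if a_still and b_still:
        return "idle"
    # Positioning: one point stays put as an anchor while the other moves.
    if a_still != b_still:
        return "positioning"
    # Both points moved. A change in their separation means scaling.
    if abs(d1 - d0) > scale_eps:
        return "scaling"
    # Same separation but the A->B direction turned: treated as rotation.
    # (A stricter check could also require the midpoint to stay put.)
    if d0 > 0 and d1 > 0 and _angle_between(_sub(b0, a0), _sub(b1, a1)) > turn_eps:
        return "rotation"
    # For example, both points translating together, which the model does not cover.
    return "unknown"


# One point fixed, the other sliding sideways.
print(classify_two_point_motion((0, 0, 0), (0.3, 0, 0), (0, 0, 0), (0.3, 0.2, 0), dt=0.5))
```

The order of the checks matters: a fast approach to contact is tested first, because it would otherwise also match positioning (if only one point moves) or scaling (if both move).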

Product

Dots is an inclusive interface for future spatial computing that empowers disabled people to design their own ways of interacting with MR and IoT, based on their body conditions.

Each set contains two dots and one wireless charging base.

We use two IMU sensors and a Bluetooth module to calculate the relative motion between the two motion trackers.
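The sentence above does not say how the relative motion is computed. One common sensor-fusion approach, assumed here rather than taken from the Dots firmware, is for each dot to report its orientation as a unit quaternion in a shared reference frame; the rotation of dot B relative to dot A is then conj(q_a) * q_b. IMUs estimate orientation well but position only with drift, so this sketch covers relative orientation only. All helper names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length

IDENTITY: Quat = (1.0, 0.0, 0.0, 0.0)


def from_axis_angle(axis: Sequence[float], degrees: float) -> Quat:
    """Unit quaternion for a rotation of `degrees` about a unit-length `axis`."""
    half = math.radians(degrees) / 2
    s = math.sin(half)
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)


def conjugate(q: Quat) -> Quat:
    """Inverse of a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def multiply(p: Quat, q: Quat) -> Quat:
    """Hamilton product p * q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def relative_rotation(q_a: Quat, q_b: Quat) -> Quat:
    """Orientation of dot B expressed in dot A's frame."""
    return multiply(conjugate(q_a), q_b)


def rotation_angle_deg(q: Quat) -> float:
    """Magnitude of the rotation a unit quaternion represents, in degrees."""
    w = min(1.0, abs(q[0]))  # abs() picks the shorter of q and -q; min() guards rounding
    return math.degrees(2 * math.acos(w))


q_a = from_axis_angle((0.0, 0.0, 1.0), 30)   # dot A turned 30 degrees about z
q_b = from_axis_angle((0.0, 0.0, 1.0), 120)  # dot B turned 120 degrees about z
print(round(rotation_angle_deg(relative_rotation(q_a, q_b)), 6))
```

Working in A's frame means that moving the whole body (or a wheelchair) does not register as a gesture; only the change between the two dots does.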

#Patent pending

#High Adaptability

Depending on their body conditions, users can attach the two dots to any of their body parts, as long as those two parts can perform at least one of the interaction patterns. It is also possible to make use of the environment, for example by attaching one dot to a table. The exact attachment points depend on the specific task the user wishes to perform.

#Design your own interaction

By connecting Dots to Mixed Reality and Internet of Things devices, users can accomplish multiple tasks using their bodies. Dots empowers everyone, especially disabled people, to interact with future technologies by letting them design customised ways of interacting based on their body conditions and specific situations.

Smart Lighting Control

Wheelchair Control

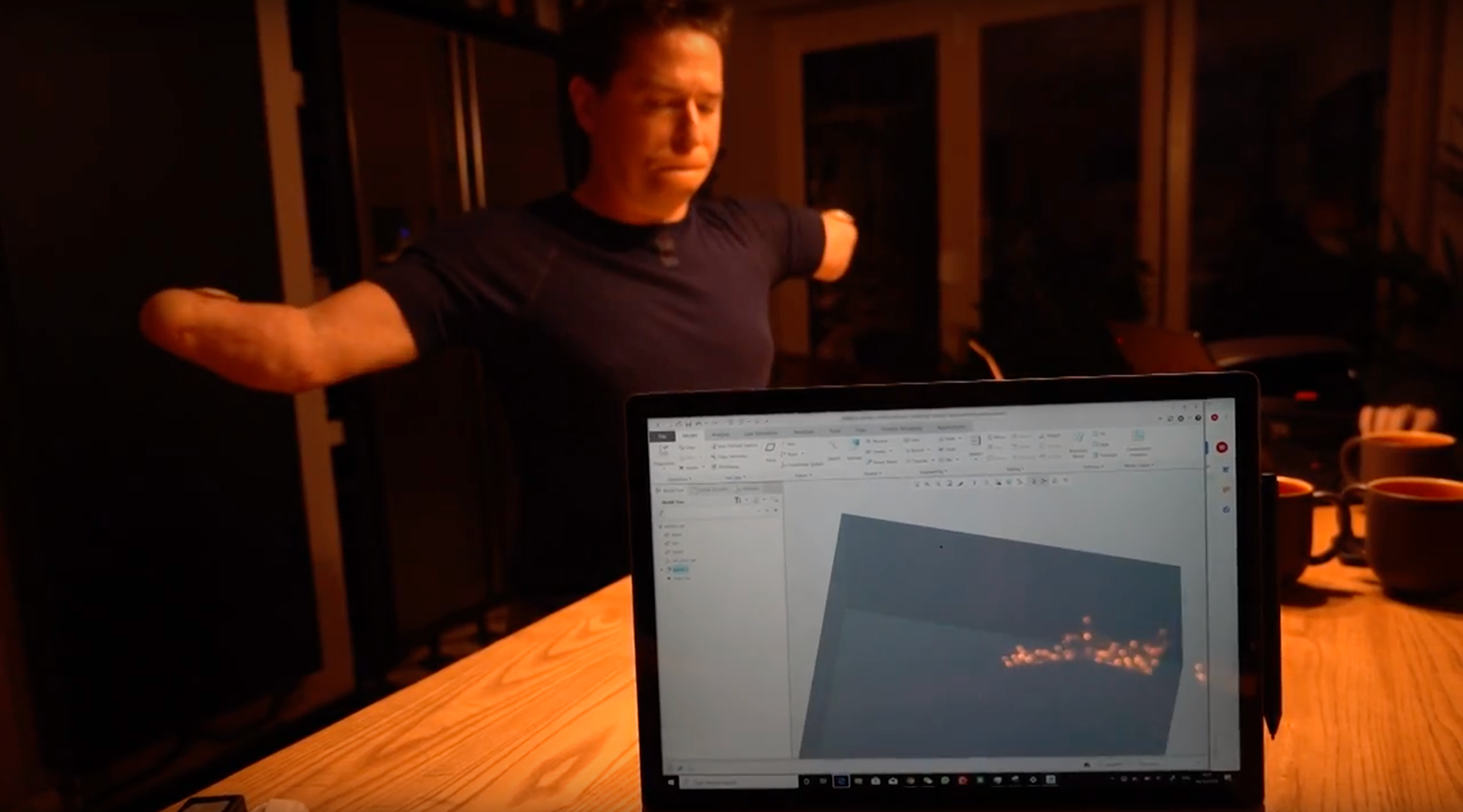

3D Model Manipulation

Typing
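The examples above rely on users binding the two-point patterns to actions of their own choosing. The sketch below shows one hypothetical way such a per-task profile could be represented; the `InteractionProfile` class, the attachment points and the action names are all illustrative assumptions, not the project's actual configuration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# The four patterns of the two-point model.
PATTERNS = {"selection", "positioning", "scaling", "rotation"}


@dataclass
class InteractionProfile:
    """A user-authored mapping from two-point patterns to actions for one task (hypothetical)."""
    task: str
    dot_a: str  # where the first dot is attached (a body part or an object)
    dot_b: str  # where the second dot is attached
    bindings: Dict[str, str] = field(default_factory=dict)

    def bind(self, pattern: str, action: str) -> "InteractionProfile":
        """Map a pattern to a named action; returns self so calls can be chained."""
        if pattern not in PATTERNS:
            raise ValueError(f"unknown pattern: {pattern!r}")
        self.bindings[pattern] = action
        return self

    def is_usable(self) -> bool:
        """A placement is only useful if it drives at least one pattern."""
        return bool(self.bindings)

    def dispatch(self, pattern: str) -> Optional[str]:
        """Return the action bound to a recognised pattern, or None if unbound."""
        return self.bindings.get(pattern)


# Hypothetical profile: one dot on the right elbow, one on the table, controlling a lamp.
lighting = (
    InteractionProfile("smart lighting", dot_a="right elbow", dot_b="table")
    .bind("selection", "toggle_light")
    .bind("scaling", "adjust_brightness")
)
print(lighting.dispatch("scaling"))
```

Keeping the bindings per task, rather than global, matches the idea that the exact attachment points and gestures depend on what the user wants to do.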